Google's Research Paper On Secure AI Agents

- Chandan Rajpurohit

- 5 days ago

- 3 min read

Updated: 4 days ago

Believe it or not AI is here to stay.

In 2025, we saw a huge spike of Agentic AI Application and AI Agents. Now the question arise is how secure this AI Agents and Applications are?

I was reading a research paper by Santiago Díaz, Christoph Kern, Kara Olive from Google in which they presented Google’s Approach for Secure AI Agents.

In the Introduction, Google did presented and shared the potential and risks associated with the AI Agents and did mentioned the need for Agent Security in AI Agents. The key risks identified by Google are rogue actions (unintended, harmful, or policy-violating actions) and sensitive data disclosure (unauthorized revelation of private information).

Building on well-established principles of secure software and systems design, and in alignment with Google’s Secure AI Framework (SAIF), Google is advocating for and implementing a hybrid approach, combining the strengths of both traditional, deterministic controls and dynamic, reasoning-based defenses. This creates a layered security posture—a “defense-in-depth approach”—that aims to constrain potential harm while preserving maximum utility. - Google’s Approach for Secure AI Agents: An Introduction

Google did explained the common agent architecture with the above risks (rogue actions & sensitive data disclosure) associated with each component of the AI Agents.

Components of the AI Agents

Input, perception and personalization

System instructions

Reasoning and planning

Orchestration and action execution (tool use)

Agent memory

Response rendering

Google propose to adopt three core principles for agent security

Principle 1: Agents must have well-defined human controllers

Principle 2: Agent powers must have limitations

Principle 3: Agent actions and planning must be observable

A summary of agent security principles, controls, and high-level infrastructure needs

Principle | Summary | Key Control Focus | Infrastructure Needs |

1. Human controllers | Ensures accountability, user control, and prevents agents from acting autonomously in critical situations without clear human oversight or att ribution. | Agent user controls | Distinct agent identities, user consent mechanisms, secure inputs |

2. Limited powers | Enforces appropriate, dynamically limited privileges, ensuring agents have only the capabilities and permissions necessary for their intended purpose and cannot escalate privileges inappropriately. | Agent permissions | Robust Authentication, Authorization, and Auditing for agents, scoped credential management, sandboxing |

3. Observable actions | Requires transparency and auditability through robust logging of inputs, reasoning, actions, and outputs, enabling security decisions and user understanding. | Agent observability | Secure/centralized logging, characterized action APIs, transparent UX |

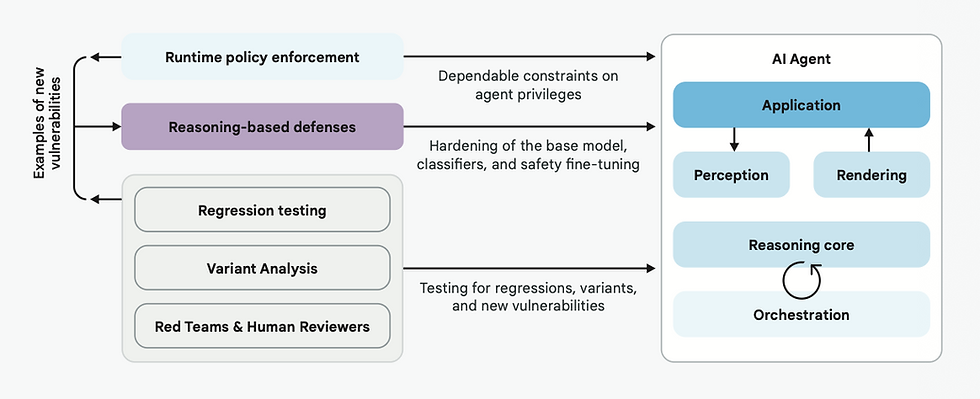

Google’s approach: A hybrid defense-in-depth

Google's approach combines traditional, deterministic security measures with

dynamic, reasoning-based defenses.

Layer 1: Traditional, deterministic measures (runtime policy enforcement)

The first security layer utilizes dependable, deterministic security mechanisms, which Google calls policy engines, that operate outside the AI model’s reasoning process. These engines monitor and control the agent’s actions before they are executed, acting as security checkpoints.

Layer 2: Reasoning-based defense strategies

To complement the deterministic guardrails and address their limitations in handling context and novel threats, the second layer leverages reasoning-based defenses: techniques that use AI models themselves to evaluate inputs, outputs, or the agent’s internal reasoning for potential risks.

Google mentioned techniques like adversarial training, specialized guard models, additionally, models can be employed for analysis and prediction.

AI Agents are the next big thing in technology and rather than holding back we should embrace it with the required security standards and framework.

I appreciate the research and work done at Google and Google DeepMind for the advancement of the safe and secure AI Systems.

Read more about Google’s Secure AI Framework at saif.google.

Comments